Interactive water simulation in Unity

Around 2018 I came across a blog post by the game studio Campo Santo about their upcoming game, In the Valley of Gods. The post showcased some amazing water tech they were developing, and it looked awesome. It inspired me to try to create my own water tech, and so began my journey of research and experimentation.

I used the techniques mentioned in their blog post as a starting point and continued researching to find tips and tricks other game companies had come up with.

By the time I had finished building my water tech, I had incorporated the following:

- Water physics using shallow water equations

- Two-way interaction between rigidbodies and the water

- Signed distance function (SDF) 3D textures for rendering shadows on the water and for precise water collision

- Custom lighting engine

Water Simulation

The starting point was to figure out how to calculate the actual water simulation. Campo Santo’s post mentioned they implemented a GPU-based simulation using a “shallow-water” approximation. Further investigation turned up various papers and theses based on the shallow water equations, each with a different implementation. The one I chose to implement was based on the pipe method.

With the shallow water equations, only the surface of the water is modelled, as a height field. This is fast and efficient, especially when implemented on the GPU, but because each point on the surface can only have a single height, it cannot simulate breaking waves.

The algorithm, in layman’s terms, is as follows. The surface of the water is divided into a grid, with each grid cell representing a column of water with a given height. Each column is connected by virtual pipes to its four neighbours: north, east, south and west. As water is added to a column, its hydrostatic pressure changes, so either excess water flows out through the pipes into the neighbouring columns, or water from the neighbouring columns flows in.
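To make the pipe method a bit more concrete, here is a minimal CPU sketch in Python rather than actual shader code. It assumes a flat bottom, closed boundaries, unit cell sizes and made-up constants (`PIPE_AREA`, `PIPE_LENGTH`, `CELL_AREA`); the names and structure are illustrative, not taken from the project.

```python
# Minimal pipe-method ("virtual pipes") shallow-water step on a 2D grid.
# Assumptions for illustration: flat bottom, closed boundaries, unit cells,
# and made-up constants below.

GRAVITY = 9.81
PIPE_AREA = 1.0    # cross-section of each virtual pipe (assumed)
PIPE_LENGTH = 1.0  # distance between neighbouring column centres (assumed)
CELL_AREA = 1.0    # horizontal area of one water column (assumed)

# Pipe directions: east, west, north, south. Opposite pipes differ by d ^ 1.
DIRS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def step(height, flux, dt):
    """Advance one time step.

    height[y][x]  water depth of each column
    flux[y][x][d] outflow through pipe d (ordered as in DIRS)
    Returns the new (height, flux).
    """
    rows, cols = len(height), len(height[0])
    new_flux = [[[0.0] * 4 for _ in range(cols)] for _ in range(rows)]

    for y in range(rows):
        for x in range(cols):
            total = 0.0
            for d, (dx, dy) in enumerate(DIRS):
                nx, ny = x + dx, y + dy
                if 0 <= nx < cols and 0 <= ny < rows:
                    # 1. Accelerate the flow by the hydrostatic pressure difference.
                    dh = height[y][x] - height[ny][nx]
                    f = flux[y][x][d] + dt * PIPE_AREA * GRAVITY * dh / PIPE_LENGTH
                    new_flux[y][x][d] = max(0.0, f)  # pipes only carry outflow
                total += new_flux[y][x][d]
            # 2. Never let a column drain more water than it holds.
            if total > 0.0:
                k = min(1.0, height[y][x] * CELL_AREA / (total * dt))
                for d in range(4):
                    new_flux[y][x][d] *= k

    # 3. New depth = old depth + (inflow - outflow) over the step.
    new_height = [row[:] for row in height]
    for y in range(rows):
        for x in range(cols):
            inflow = 0.0
            for d, (dx, dy) in enumerate(DIRS):
                nx, ny = x + dx, y + dy
                if 0 <= nx < cols and 0 <= ny < rows:
                    inflow += new_flux[ny][nx][d ^ 1]  # neighbour's pipe pointing back here
            outflow = sum(new_flux[y][x])
            new_height[y][x] += dt * (inflow - outflow) / CELL_AREA
    return new_height, new_flux
```

The clamp in step 2 is what stops columns from going negative, and because every pipe's flow is subtracted from one column and added to another, the total volume of water is conserved.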

More information on the algorithm can be found here https://web.archive.org/web/20201101141245/https://tutcris.tut.fi/portal/files/4312220/kellomaki_1354.pdf

It so happened that I had previously stumbled upon a blog by a developer who goes by the name Scrawk, who had implemented a terrain erosion system on the GPU (https://github.com/Scrawk/Interactive-Erosion). From his work I learned how to use Blit() to process data on the GPU, and it was a great starting point. I should mention that I could also have used compute shaders for this task, but I was trying to keep compatibility with older mobile GPUs.

Having developed the bare-bones water simulation, the next task was to tackle interaction. Campo Santo mentioned they achieved interaction by using signed distance functions (SDFs).

SDF

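SDF stands for signed distance function: a function that, for any point in space, returns the distance from that point to the nearest point on a shape’s surface. The sign tells you which side of the surface you are on; by convention the value is negative inside the shape and positive outside.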
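As a tiny illustration of that definition, here are two classic SDFs in Python. The box formula follows the one Inigo Quilez publishes; the function names are just illustrative.

```python
import math


def sd_sphere(p, centre, radius):
    """Signed distance from point p to a sphere: < 0 inside, 0 on the surface, > 0 outside."""
    return math.dist(p, centre) - radius


def sd_box(p, centre, half_size):
    """Signed distance to an axis-aligned box with the given half extents."""
    q = [abs(p[i] - centre[i]) - half_size[i] for i in range(3)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))  # distance when outside
    inside = min(max(q[0], q[1], q[2]), 0.0)               # negative depth when inside
    return outside + inside
```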

With this one piece of information we can reason about an environment. Traditionally, to display 3D graphics we would use a shader to render a mesh that an artist has made or that we have generated procedurally.

But in this case we do things a little differently. Instead, for each fragment, a pixel shader raymarches along the viewing ray. The shader is attached to a simple quad or to the camera. As we march from the starting position, we calculate the distance to the nearest point on the shape by evaluating its SDF. This distance tells us how far we can safely march next without tunnelling through the surface. Then, once a collision is detected or after N steps, we colour the pixel based on the result.

For example, imagine a sphere with a given position and radius: from any point in space we can determine how close we are to the sphere’s surface. Therefore, we can step along the viewing ray by the distance returned from the SDF. This distance is important: since no surface is closer than that, stepping by it ensures we don’t tunnel through the shape. In a sense, raymarching like this (often called sphere tracing) is an optimization over marching in small fixed steps. When the distance returned is below a threshold, we consider it a collision with the surface. We can then measure how far along the ray we travelled and use that length to shade the pixel. If no collision is found, we give up after a maximum of N steps.
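A minimal sphere-tracing loop in Python, assuming a single hard-coded sphere and placeholder values for `MAX_STEPS` (the N above), `HIT_EPSILON` and `MAX_DISTANCE`. In the real thing this runs per fragment in the pixel shader.

```python
import math

MAX_STEPS = 128       # the "N" from the text (value assumed)
HIT_EPSILON = 1e-4    # distance below which we call it a hit (assumed)
MAX_DISTANCE = 100.0  # give up once the ray has travelled this far (assumed)


def sd_sphere(p, centre=(0.0, 0.0, 5.0), radius=1.0):
    return math.dist(p, centre) - radius


def raymarch(origin, direction, sdf):
    """Sphere tracing. Returns the distance travelled to the hit, or None on a miss.
    direction must be normalised."""
    t = 0.0
    for _ in range(MAX_STEPS):
        p = tuple(origin[i] + direction[i] * t for i in range(3))
        d = sdf(p)
        if d < HIT_EPSILON:
            return t
        # Nothing in the scene is closer than d, so stepping by d cannot tunnel through.
        t += d
        if t > MAX_DISTANCE:
            break
    return None
```

To shade, the returned distance can be mapped to a brightness, for example `1 - t / MAX_DISTANCE`, with a background colour used when it returns `None`.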

We can render various primitive shapes like this and even combine them to build more complex objects.
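Combining shapes comes down to simple operators on the distances they return. Below is a sketch of the standard ones (union is `min`, subtraction and intersection use `max`), plus Quilez’s polynomial smooth minimum for blending shapes together; `scene` is a hypothetical two-sphere example.

```python
import math


def sd_sphere(p, centre, radius):
    return math.dist(p, centre) - radius


def op_union(d1, d2):
    return min(d1, d2)


def op_subtract(d1, d2):
    """Carve shape 2 out of shape 1."""
    return max(d1, -d2)


def op_intersect(d1, d2):
    return max(d1, d2)


def op_smooth_union(d1, d2, k):
    """Polynomial smooth minimum; k controls the blend radius."""
    h = max(k - abs(d1 - d2), 0.0) / k
    return min(d1, d2) - h * h * k * 0.25


def scene(p):
    """Hypothetical scene: two unit spheres side by side."""
    a = sd_sphere(p, (-1.0, 0.0, 0.0), 1.0)
    b = sd_sphere(p, (1.0, 0.0, 0.0), 1.0)
    return op_union(a, b)
```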

Inigo Quilez, who co-created the site Shadertoy, is “the guy” for anything and everything related to SDFs. On his site he lists the functions for calculating the SDFs of many different shapes, as well as ways of joining them into composite functions. There are plenty of examples on Shadertoy showcasing what is possible.

That being said, while the examples are cool, they don’t really work when we have a specific shape we want to render. Building a complex game character from primitives would be very time consuming. Also, the final function would be very expensive, as we would need to take the minimum over the probably hundreds of SDFs that make up the model.

The answer to this problem is to prebake the data. To do this I created a 3D grid in the Unity Editor, with each grid point corresponding to a position in a 3D texture. For each grid point, I iterated over every triangle in the mesh, called the distance function for that triangle, kept the smallest result as the distance to the closest point on the mesh, and finally stored it in the 3D texture.
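The brute-force bake might look something like this in Python; it is far slower than a compute shader but shows the idea. The triangle distance follows Inigo Quilez’s `udTriangle`, which is unsigned: determining the sign (inside vs outside the mesh) needs an extra test that this sketch leaves out. The function names and the flat `x + y*res + z*res*res` layout are assumptions.

```python
import math


def _sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def _dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def _cross(a, b): return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def _scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def _sign(x): return (x > 0) - (x < 0)
def _clamp01(x): return max(0.0, min(1.0, x))


def ud_triangle(p, a, b, c):
    """Unsigned distance from p to triangle abc (assumes a non-degenerate triangle)."""
    ba, pa = _sub(b, a), _sub(p, a)
    cb, pb = _sub(c, b), _sub(p, b)
    ac, pc = _sub(a, c), _sub(p, c)
    nor = _cross(ba, ac)
    inside = (_sign(_dot(_cross(ba, nor), pa)) +
              _sign(_dot(_cross(cb, nor), pb)) +
              _sign(_dot(_cross(ac, nor), pc))) >= 2
    if inside:
        # p projects inside the triangle: distance to its plane.
        return math.sqrt(_dot(nor, pa) ** 2 / _dot(nor, nor))

    def edge(e, pe):
        # Squared distance from p to the closest point on one edge.
        q = _sub(_scale(e, _clamp01(_dot(e, pe) / _dot(e, e))), pe)
        return _dot(q, q)

    return math.sqrt(min(edge(ba, pa), edge(cb, pb), edge(ac, pc)))


def bake_distance_grid(triangles, bounds_min, bounds_max, res):
    """For every grid point, keep the minimum distance over all triangles.
    Returns a flat list indexed x + y*res + z*res*res, like a 3D texture."""
    grid = [0.0] * (res ** 3)
    for z in range(res):
        for y in range(res):
            for x in range(res):
                p = tuple(bounds_min[i] + (bounds_max[i] - bounds_min[i]) * idx / (res - 1)
                          for i, idx in enumerate((x, y, z)))
                grid[x + y * res + z * res * res] = min(ud_triangle(p, *tri) for tri in triangles)
    return grid
```

The cost is grid points × triangles, which is why moving this loop onto the GPU pays off so dramatically.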

Some of the more complicated items, such as the galleon ship, required a 100×100 grid, and the ship itself was made up of 12,000 triangles. The problem was that this was really slow to bake (hours). So the next step was to move all this code off the CPU and onto the GPU. After writing a compute shader in Unity, I was able to bake the SDF to a texture in a matter of minutes!

As the SDF data is stored in a 3D texture, it’s easy to feed into the water simulation shaders.

Within the shaders I use the 3D textures for two purposes: collision, and rendering shadows on the water surface.
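Both uses boil down to looking up the baked distance at arbitrary world positions, which the GPU does with filtered texture sampling. For a CPU-side picture of that lookup, here is a trilinear sampler for a flat `res`³ grid stored as `x + y*res + z*res*res`, assuming the samples sit exactly on grid points spanning `bounds_min` to `bounds_max` (a real GPU 3D texture places samples at texel centres instead).

```python
def sample_trilinear(grid, res, bounds_min, bounds_max, p):
    """Trilinearly interpolate a res^3 grid (res >= 2) at world position p."""
    # World position -> continuous grid coordinates, clamped to the grid.
    g = []
    for i in range(3):
        u = (p[i] - bounds_min[i]) / (bounds_max[i] - bounds_min[i])
        g.append(min(max(u, 0.0), 1.0) * (res - 1))
    x0, y0, z0 = (min(int(c), res - 2) for c in g)
    fx, fy, fz = g[0] - x0, g[1] - y0, g[2] - z0

    def at(x, y, z):
        return grid[x + y * res + z * res * res]

    def lerp(a, b, t):
        return a + (b - a) * t

    # Interpolate along x, then y, then z.
    c00 = lerp(at(x0, y0, z0), at(x0 + 1, y0, z0), fx)
    c10 = lerp(at(x0, y0 + 1, z0), at(x0 + 1, y0 + 1, z0), fx)
    c01 = lerp(at(x0, y0, z0 + 1), at(x0 + 1, y0, z0 + 1), fx)
    c11 = lerp(at(x0, y0 + 1, z0 + 1), at(x0 + 1, y0 + 1, z0 + 1), fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)
```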

Collision

Calculating the collision required working out how much water should be displaced from a column if an object was occupying that area. To do this I used the SDF data to march one ray from the water surface straight down and another from the bottom straight up. Any difference between the water height and these distances is then used to update the displacement of water in the simulation. One of the nice things is that the collision shape from the SDF is a lot more detailed than one built from primitives.
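A rough sketch of that per-column test in Python, with a hypothetical sphere standing in for the baked SDF texture and assumed values for the step count and hit threshold. Marching down from the surface finds the top of the object, marching up from the floor finds its bottom, and the overlap with the water column is the height of water to displace. Starting inside the object counts as an immediate hit, which handles a hull that pokes out of the water.

```python
import math

HIT_EPSILON = 1e-4  # assumed
MAX_STEPS = 64      # assumed


def sd_sphere(p, centre=(0.0, 1.0, 0.0), radius=0.5):
    return math.dist(p, centre) - radius


def march(sdf, origin, direction, max_dist):
    """Sphere-trace along direction; return the distance to the first hit or None.
    A negative distance (starting inside the object) is an immediate hit."""
    t = 0.0
    for _ in range(MAX_STEPS):
        p = tuple(origin[i] + direction[i] * t for i in range(3))
        d = sdf(p)
        if d < HIT_EPSILON:
            return t
        t += d
        if t >= max_dist:
            return None
    return None


def displaced_height(sdf, x, z, water_height, floor_y):
    """Height of the part of water column (x, z) that the object occupies."""
    depth = water_height - floor_y
    down = march(sdf, (x, water_height, z), (0.0, -1.0, 0.0), depth)
    if down is None:
        return 0.0  # nothing in this column
    up = march(sdf, (x, floor_y, z), (0.0, 1.0, 0.0), depth)
    if up is None:
        return 0.0
    top = water_height - down
    bottom = floor_y + up
    return max(0.0, top - bottom)
```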

You may remember that at the beginning of this article I said I had made a two-way water collision system: not only do the objects move the water, but the water can also move the objects.

This is where I thought outside the box. The problem is that GPUs are designed to have data pushed into them, but they are not so good at getting data back out. Transferring data from the GPU back to the CPU stalls the graphics pipeline, which causes a significant performance spike.

My solution was to keep the GPU out of this part entirely. At the time I was researching all this tech, Unity had released its Burst compiler and Job System, which gave a big performance increase to code running on the CPU through multithreading and optimised memory layout.

I ported the water simulation to Unity jobs, but at a lower grid resolution to help narrow the performance gap. The rigidbodies on the CPU then use the velocity data from this CPU simulation to add force, so that they are pushed by the water. The GPU and CPU water simulations run independently but with the same input data. Because both simulations are damped over time, they stay fairly in sync and the effect works well.
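On the CPU side, the push from the water could be sketched like this: bilinearly sample the low-resolution velocity grid at the body’s position and apply a force towards the water’s velocity. The linear drag model, the grid layout and the `DRAG` constant are assumptions for illustration; in Unity the resulting vector would be handed to `Rigidbody.AddForce`.

```python
DRAG = 2.0  # hypothetical tuning constant


def sample_velocity(vel_x, vel_z, cell_size, x, z):
    """Bilinearly sample a 2D velocity field stored as grids [z_index][x_index]
    (at least 2x2), with grid point (i, j) at world position (i, j) * cell_size."""
    rows, cols = len(vel_x), len(vel_x[0])
    gx = min(max(x / cell_size, 0.0), cols - 1.0)
    gz = min(max(z / cell_size, 0.0), rows - 1.0)
    x0, z0 = min(int(gx), cols - 2), min(int(gz), rows - 2)
    fx, fz = gx - x0, gz - z0

    def bilerp(g):
        near = g[z0][x0] * (1 - fx) + g[z0][x0 + 1] * fx
        far = g[z0 + 1][x0] * (1 - fx) + g[z0 + 1][x0 + 1] * fx
        return near * (1 - fz) + far * fz

    return bilerp(vel_x), bilerp(vel_z)


def water_force(vel_x, vel_z, cell_size, body_pos, body_vel):
    """Horizontal force pulling a body towards the local water velocity (linear drag)."""
    wx, wz = sample_velocity(vel_x, vel_z, cell_size, body_pos[0], body_pos[2])
    return (DRAG * (wx - body_vel[0]), 0.0, DRAG * (wz - body_vel[2]))
```

Because the force is proportional to the relative velocity, a body drifting at the same speed as the water feels no push.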

Rendering

The final step for my water tech was to render the water data as realistically as possible. The secret to making water look good is the lighting and how it interacts with the water. First I generated the normals by sampling the heightmap. Fresnel was then added in combination with a cube map, which makes the water look reflective from some angles and transparent from others. Refraction is added by sampling a GrabPass texture (_GrabTexture), with the lookup distorted by the water surface normals.
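Here is a small Python sketch of the first two steps: normals from the heightmap by central differences, and a Fresnel term used to blend the cube-map reflection with the refracted colour. Schlick’s approximation and `f0 = 0.02` (roughly right for an air/water interface with an IOR of about 1.33) are assumptions here, not necessarily what the project’s shader used.

```python
import math


def normal_from_heightmap(h, x, z, cell_size):
    """Normal at interior grid point (x, z) of heightmap h[z][x], via central differences."""
    dhdx = (h[z][x + 1] - h[z][x - 1]) / (2.0 * cell_size)
    dhdz = (h[z + 1][x] - h[z - 1][x]) / (2.0 * cell_size)
    n = (-dhdx, 1.0, -dhdz)
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def fresnel_schlick(cos_theta, f0=0.02):
    """Schlick's approximation of the reflected fraction of light."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def shade(reflection, refraction, normal, view_dir):
    """Blend reflected (cube map) and refracted (grab texture) colours.
    view_dir points from the surface towards the camera."""
    cos_theta = max(0.0, sum(n * v for n, v in zip(normal, view_dir)))
    f = fresnel_schlick(cos_theta)
    return tuple(r * f + t * (1.0 - f) for r, t in zip(reflection, refraction))
```

Looking straight down gives almost pure refraction (2% reflection), while at grazing angles the reflection takes over, which is exactly the reflective-versus-transparent behaviour described above.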

I then implemented a fake subsurface scattering effect using the technique described here: https://www.fxguide.com/fxfeatured/assassins-creed-iii-the-tech-behind-or-beneath-the-action/

Rendering shadows on the water surface was another big feature. The Campo Santo blog post mentioned it was a challenge, as Unity’s built-in render pipeline did not support shadows on transparent surfaces. These days, if you use the Universal Render Pipeline (URP) in Unity, this limitation no longer exists. I also recently discovered that the built-in shadow map can now be sampled when using the built-in pipeline.

But back then, every forum post suggested making your own shadows.

My solution was to make use of the SDF textures. I raymarched from the water surface along the light direction and tested for collisions with any of the SDF items. If a collision was detected, the water surface was being occluded. One of the benefits of using SDFs for a shadow system is that it’s easy to incorporate a penumbra effect, so that shadows get softer edges the further the occluder is from the water surface!
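A sketch of that shadow march in Python, in the style of Inigo Quilez’s SDF soft shadows: the ray travels from the water surface towards the light, and every near miss darkens the result by `k * h / t`, so the penumbra widens the further along the ray the occluder is. The sphere occluder and the values of `min_t`, `max_t` and `k` are placeholders.

```python
import math


def sd_sphere(p, centre=(0.0, 2.0, 0.0), radius=0.5):
    return math.dist(p, centre) - radius


def soft_shadow(sdf, origin, light_dir, min_t=0.01, max_t=10.0, k=8.0):
    """Return 0 (fully shadowed) to 1 (fully lit). Larger k gives harder edges.
    light_dir is normalised and points from the surface towards the light."""
    result = 1.0
    t = min_t  # start slightly off the surface to avoid self-hits
    for _ in range(256):
        if t >= max_t:
            break
        p = tuple(origin[i] + light_dir[i] * t for i in range(3))
        h = sdf(p)
        if h < 1e-3:
            return 0.0  # the ray hit an occluder
        # A close miss (small h) far along the ray (large t) gives a wide, soft penumbra.
        result = min(result, k * h / t)
        t += h
    return result
```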

The final step for rendering water was to add caustics. The easy way to render caustics is to project an animated texture into the scene. But I wanted real-time caustics calculated from the water surface. By far the best implementation I found was in this WebGL demo by Evan Wallace: https://medium.com/@evanwallace/rendering-realtime-caustics-in-webgl-2a99a29a0b2c

The reason this one is the best is that the algorithm moves the vertices of a mesh along the refracted light rays, and then uses ddx and ddy in the fragment shader to compare each triangle’s area before and after refraction: triangles that shrink (light is focused) are brightened, and triangles that grow (light is spread out) are darkened. This creates really interesting formations and patterns that many other caustics algorithms lack.
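To illustrate the area trick, here is a per-triangle Python version under simplifying assumptions: a flat floor at `y = -depth`, light travelling straight down, and an IOR of 1.333. Each vertex’s light ray is refracted with the same formula as the shader `refract()` intrinsic and projected onto the floor, and the triangle’s area with flat normals is compared with its area using the real normals. The demo itself does this comparison per pixel with screen-space derivatives.

```python
import math

ETA = 1.0 / 1.333  # ratio of refractive indices, air -> water (assumed)


def refract(i, n, eta):
    """Same formula as GLSL/HLSL refract(); i and n must be normalised."""
    d = sum(a * b for a, b in zip(n, i))
    k = 1.0 - eta * eta * (1.0 - d * d)
    if k < 0.0:
        return (0.0, 0.0, 0.0)  # total internal reflection (cannot happen air -> water)
    s = eta * d + math.sqrt(k)
    return tuple(eta * a - s * b for a, b in zip(i, n))


def project_to_floor(p, n, light_dir, depth):
    """Refract light at surface point p (y = 0) and return where it hits the floor (x, z)."""
    r = refract(light_dir, n, ETA)
    t = depth / -r[1]
    return (p[0] + r[0] * t, p[2] + r[2] * t)


def tri_area(a, b, c):
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def caustic_intensity(verts, normals, light_dir, depth):
    """Old area / new area: > 1 means light is focused (brighter), < 1 spread out (darker)."""
    flat = (0.0, 1.0, 0.0)
    old = [project_to_floor(v, flat, light_dir, depth) for v in verts]
    new = [project_to_floor(v, n, light_dir, depth) for v, n in zip(verts, normals)]
    return tri_area(*old) / tri_area(*new)
```

A bump in the surface (normals tilted outwards) acts like a lens and focuses light, which is where the bright caustic lines come from.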

I then set up a camera dedicated to rendering the caustics to a texture, to which I applied some blur filtering and chromatic aberration.

Then, for all the items and tiles, I created a surface shader that samples this caustics texture using the pixel’s world position, checks it against the height field to ensure the pixel is under the water surface, and finally uses the dot product of the surface normal and the up vector to mask where the caustics are applied.
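Written out as plain Python, the per-pixel logic of such a surface shader might look like the following. The world-to-texture mapping (a square of `tex_world_size` units starting at the origin), the nearest-texel lookup and the `water_height_at` callback are all hypothetical stand-ins for real texture sampling.

```python
def caustic_contribution(world_pos, normal, caustic_tex, tex_world_size, water_height_at):
    """Hypothetical per-pixel caustic term for a surface that may be under water.

    caustic_tex: 2D list [row][col] of intensities covering tex_world_size units in x/z.
    water_height_at: function (x, z) -> height of the water surface there.
    """
    x, y, z = world_pos
    # 1. Only pixels below the water surface receive caustics.
    if y >= water_height_at(x, z):
        return 0.0
    # 2. Look up the caustics texture with the pixel's world-space x/z (nearest texel).
    rows, cols = len(caustic_tex), len(caustic_tex[0])
    u = min(max(x / tex_world_size, 0.0), 1.0)
    v = min(max(z / tex_world_size, 0.0), 1.0)
    caustic = caustic_tex[min(int(v * rows), rows - 1)][min(int(u * cols), cols - 1)]
    # 3. Mask by dot(normal, up): floors get full caustics, walls and overhangs none.
    facing_up = max(0.0, normal[1])
    return caustic * facing_up
```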

Physics

The buoyancy physics comes directly from a post by Habrador.
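For context, boat buoyancy of this kind is commonly built from a hydrostatic force on each submerged hull triangle, F = -ρ g h A n, where h is the depth of the triangle’s centre, A its area and n its outward normal. The sketch below shows only that core formula under simplifying assumptions (fully submerged triangles, fresh-water density); it is not Habrador’s code, and a full version would also split triangles that cross the waterline.

```python
WATER_DENSITY = 1000.0  # kg/m^3 (assumed fresh water)
GRAVITY = 9.81          # m/s^2


def triangle_buoyancy(a, b, c, water_height):
    """Hydrostatic force on one hull triangle whose vertices are ordered so that
    cross(b - a, c - a) points out of the hull. Returns (fx, fy, fz)."""
    centre = tuple((a[i] + b[i] + c[i]) / 3.0 for i in range(3))
    depth = water_height - centre[1]
    if depth <= 0.0:
        return (0.0, 0.0, 0.0)  # above the water: no pressure force
    ab = tuple(b[i] - a[i] for i in range(3))
    ac = tuple(c[i] - a[i] for i in range(3))
    cross = (ab[1] * ac[2] - ab[2] * ac[1],
             ab[2] * ac[0] - ab[0] * ac[2],
             ab[0] * ac[1] - ab[1] * ac[0])
    # |cross| is twice the area, so area * unit_normal == cross / 2.
    scale = -WATER_DENSITY * GRAVITY * depth * 0.5
    return tuple(scale * component for component in cross)
```

Summing this over every submerged triangle, and applying each force at its triangle’s centre, is what lets a hull tilt and settle realistically rather than just bobbing up and down.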

Art

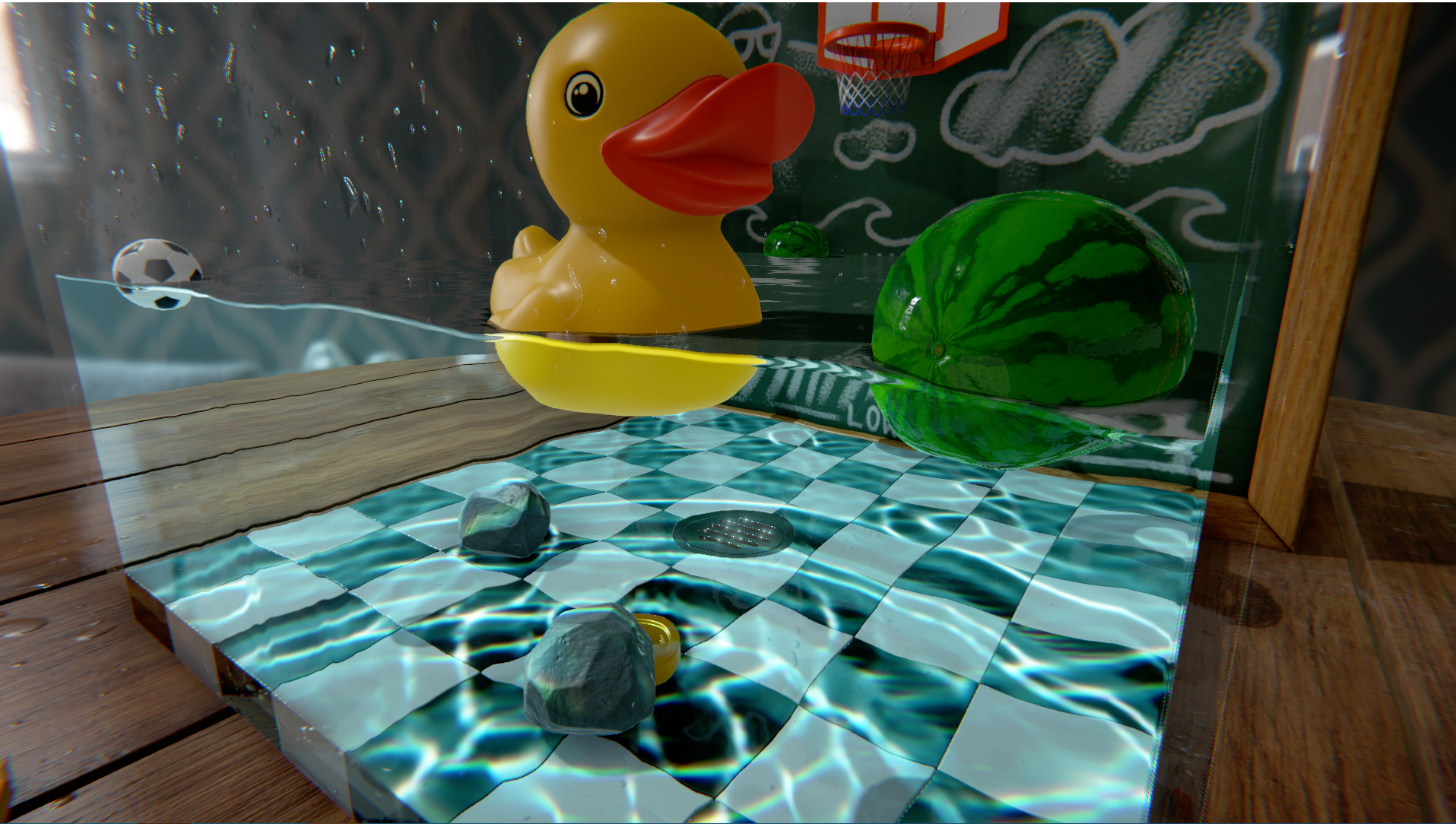

Lastly, I should point out that even with all this tech, it takes a great artist to get the most out of it. Ryan Bargiel did an outstanding job creating the environment and assets, tweaking all the shaders and bugging me with feature requests. Even four years later, I still think this water tech demo holds up well.